Are using superfluous columns, in a SELECT list, bad? Sure they are and for a multitude of reasons. The better question is, why do developers still use superfluous columns, knowing that they should not? I believe developers get tunnel vision and forget to look at the big picture, perhaps it is laziness, or perhaps the developer does not care because company policy is to fix performance problems with bigger hardware, which is common these days. For whatever the reason, tunnel vision and laziness usually lead to rapidly developed, non-performant code. Developers often forget the overall impact bad code can have on a system, no matter how simple. The impact depends on varying factors including: access frequency, bytes returned, query performance etc... I am going to explore why superfluous columns are not a best practice.

Let’s get started by creating a sample table with a million rows.

USE [tempdb]

GO

IF OBJECT_ID('test..t') IS NOT NULL

DROP TABLE t

GO

SELECT TOP 1000000 --<<<LOOK! CHANGE THIS NUMBER TO CHANGE THE NUMBER OF ROWS!

RowNum = IDENTITY(INT,1,1),

SomeID = ABS(CHECKSUM(NEWID()))%2500+1, --<<<LOOK! CHANGE THIS NUMBER TO 1/400th THE ROW COUNT

SomeCode = CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65),

SomeCode2 = CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65),

SomeCode3 = CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

INTO dbo.t

FROM Master.dbo.SysColumns t1,

Master.dbo.SysColumns t2

--===== Add the index to support both types of queries

CREATE INDEX IXC_TestData_Cover1 ON dbo.t(SomeID,SomeCode)

GO

Now that we have our table and test data, let’s run a simple query using select * and another select statement that uses fewer columns. You should enable client statistics to view the bytes returned to the client.

Note: You can enable this via the toolbar, shown below, or by clicking the option from the query menu.

First run the query with superfluous columns ( Make sure you enable client statistics)

SELECT *

FROM dbo.t

WHERE

someid < 500

GO

Now let’s run the query with fewer columns:

SELECT

RowNum,

SomeID,

SomeCode

FROM dbo.t

WHERE

someid < 500

GO

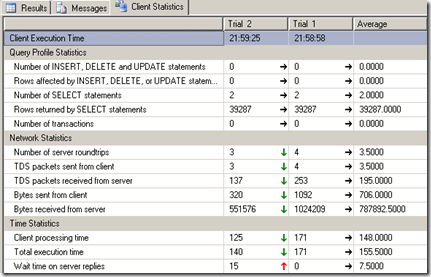

The client statistics between the two runs should look similar to the screenshot below. You will notice that the bytes received from the server has drastically decreased, for the query with fewer columns in the SELECT list. The bytes sent from client is the number of bytes that make up the query text, which is irrelevant to our tests.

The value of interest is Bytes received from server. This represents the number of bytes to the client, from the server. In our case, the bytes dropped from 1024209 to 551576. To put the number of bytes reduced into perspective, lets crunch the numbers. We know that the superfluous query used 1024209 bytes. We can transform this into the number of MBs returned. We are going to base these numbers on a concurrent user load of 500 users. Our calculation becomes. ((Number of bytes * number of concurrent users) / 1024.0) / 1024.0. The result of this calculation is shown below.

|

Bytes |

KB |

MB |

|

|

Superfluous Columns |

512104500 |

500102.1 |

488.381 |

|

Non-Superfluous Columns |

275788000 |

269324.2 |

263.012 |

As you can see, the number of bytes returned by the superfluous query is over 200 MB larger. This may not seem like much, but this is only one query and imagine if you have a 1000 concurrent users. The superfluous column query would return approximately 500MB more data. This means the superfluous query would return nearly 1GB of data… now that is huge!

By selecting the columns we need, we are able to reduce the amount of data the web server has to process, which can lead to faster load times, less consumed resources, and a better end user experience.

So, your probably sold on the idea that superfluous columns are bad, but I have one more point to make. Not only does the query return more bytes, but superfluous columns can affect the optimizer’s ability to use a covering index, even in a derived table/CTE.

Using the same sample, with a slight modification.

Superfluous query:

SELECT *

FROM dbo.t

WHERE

someid = 100

GO

Below is the query plan. What you see is that our index was able to be used; however, the index does not contain all the columns in our SELECT list, thus the optimizer has to go back to the HEAP to get the remaining column data.

Note: depending on the indexes in place the RID look could be replaced by a Key Lookup, if a clustered index exists.

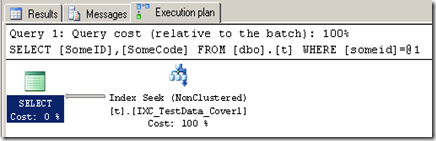

Here is the non-superfluous column query:

SELECT

SomeID,

SomeCode

FROM dbo.t

WHERE

someid = 100

GO

These are the results from the query with non-superfluous columns:

You can test the same results with a CTE:

--this yeilds an index RID lookup

;WITH cte

AS

(

SELECT

[RowNum]

[SomeId],

[SomeCode],

[SomeCode2],

[SomeCode3]

FROM t

WHERE

[SomeId] = 100

)

SELECT

[SomeId],

[SomeCode]

FROM cte

--this yields an index seek via covering index

;WITH cte

AS

(

SELECT

[SomeId],

[SomeCode]

FROM t

WHERE

[SomeId] = 100

)

SELECT

[SomeId],

[SomeCode]

FROM cte

Results:

Superfluous columns:

Non-superfluous columns:

So there you have it. Selecting more columns than you need, not only affects application performance, it affects the number of bytes returned to the client. All of these factors can directly affect the user’s experience, which should be very important to developer/DBA staff. Additionally, superfluous columns can cause bookmark lookups, which will degrade database performance.

1 comment:

I believe you may also know about how to repair a sql database

Post a Comment